Description:

The SoundCatcher is an open-air gestural controller designed to control a looper and time-freezing sound patch. It uses ultrasonic sensors to measure the distance from the performer’s hands to the device, which is mounted on a microphone stand. Tactile and visual feedback, delivered by a pair of vibrating motors and LEDs, notifies the performer when he/she is inside the sensed space. In addition, the speed of each motor is scaled to the distance between the corresponding hand and the microphone stand to provide tactile cues about hand position.

Motivation

Contemporary vocal performance is almost always associated with the use of microphones and amplification. Furthermore, vocals in concerts and recordings are among the most heavily processed musical signals. However, the audio processing is usually in the hands of the sound engineer, which prevents singers from augmenting, processing, and controlling their own vocal performance. Hence, SoundCatcher is designed as an open-air gestural controller for singers that allows them to sample their performance, then loop and process it in real-time, creating new possibilities for performance and composition in live, rehearsal, and recording contexts.

Details

SoundCatcher is a music performance and compositional controller that allows a musician to sample and process her voice or other live audio input in real-time. In its most basic setup, the gestural interface allows the performer to record and overdub a sound engine’s buffer at any moment, and to control its playback behavior by changing the loop start and end points according to the position of her hands relative to a reference point. To provide more than aural feedback, a pair of vibrating motors and LEDs give tactile and visual feedback that informs the performer when she is inside the sensed space. Furthermore, each motor’s speed is scaled to the distance between the corresponding hand and the microphone stand, providing tactile cues about hand position.

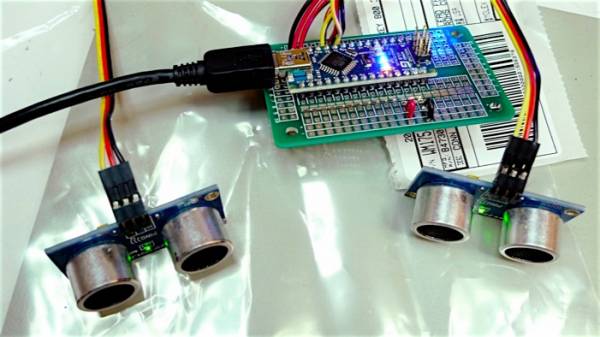

Sensors and gesture acquisition

Ultrasonic sensors were selected to capture hand position and movement because of their linear response over the required detection range, power requirements, narrow acceptance angle, shape, and low cost. Each sensor measures the distance from the microphone stand to the performer’s hand by emitting a short 40 kHz burst. This signal travels through the air, hits the performer’s hand, and bounces back to the sensor. The sensor provides an output pulse that terminates when the echo is detected, so the width of this pulse corresponds to the distance to the target. Although each sensor can measure from two centimeters up to three meters, in our configuration the sensed space for each hand is limited to sixty centimeters. This allows the performer to open both arms to a maximum of 1.2 meters while avoiding reflections from walls and other objects close to the performer. In addition, a pedal footswitch was implemented to switch buffer recording on or off on the software side. The data acquired from the sensors is read by the open-source Arduino platform and handled in its Wiring-based language.
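The relationship between echo pulse width and distance can be sketched as follows. This is a minimal illustration, not the device firmware: it assumes the pulse width equals the round-trip time of flight and uses a nominal speed of sound of about 343 m/s. The function names are hypothetical; the 2 cm minimum and 60 cm limit come from the description above.

```python
# Assumed speed of sound (~343 m/s near 20 °C), in centimeters per microsecond.
SPEED_OF_SOUND_CM_PER_US = 0.0343
MIN_RANGE_CM = 2.0    # sensor minimum range stated in the text
MAX_RANGE_CM = 60.0   # sensed space per hand stated in the text


def echo_to_distance_cm(pulse_width_us):
    """Convert an echo pulse width (microseconds) to a one-way distance in cm.

    Assumes the pulse width is the round-trip travel time, so it is halved.
    """
    return pulse_width_us * SPEED_OF_SOUND_CM_PER_US / 2.0


def hand_distance_cm(pulse_width_us):
    """Return the hand distance, or None when it falls outside the sensed space."""
    distance = echo_to_distance_cm(pulse_width_us)
    if distance < MIN_RANGE_CM or distance > MAX_RANGE_CM:
        return None
    return distance
```

Under these assumptions, the 60 cm limit corresponds to a pulse of roughly 3.5 ms, so longer echoes (for example, from a wall) are simply discarded.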

Vibrotactile and visual feedback

To provide the performer with cues about the sensed space without requiring a computer screen, two actuators are used to provide vibrotactile feedback. In addition, it is expected that this feedback will provide most of the information needed for expert performance. Given the frequency response requirements for the fingers and the system, two motors were selected to transduce the measured distance into vibration. The voltage signal driving each motor is scaled according to the distance from the hand to the microphone stand: the shorter the distance, the faster the motor vibrates. If the hands go beyond the sensed space, the motors do not vibrate at all. Two digital pins with pulse-width modulation (PWM) capability on the Arduino board are used to drive the actuators. Furthermore, the motors are attached to the box device through cables, so the performer can hold them or leave them loose, depending on her needs. With this solution, the performer can obtain haptic feedback without losing her mobility.
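The distance-to-vibration scaling described above could look like the sketch below. It assumes a linear mapping and an 8-bit duty range (0 to 255, the range accepted by Arduino's `analogWrite()`); the actual scaling curve used in SoundCatcher is not specified, and `motor_duty` is a hypothetical name.

```python
MAX_RANGE_CM = 60.0  # sensed space per hand stated in the text
PWM_MAX = 255        # 8-bit duty range, as accepted by Arduino analogWrite()


def motor_duty(distance_cm):
    """Map a hand distance to a PWM duty value (assumed linear mapping).

    Closer hands give a higher duty value, so the motor vibrates faster.
    Readings outside the sensed space (or missing readings) switch the motor off.
    """
    if distance_cm is None or distance_cm < 0 or distance_cm > MAX_RANGE_CM:
        return 0
    closeness = 1.0 - distance_cm / MAX_RANGE_CM
    return int(closeness * PWM_MAX)
```

A linear curve is the simplest choice; a perceptually tuned curve or a minimum duty value inside the sensed space would be equally plausible design options.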

For performance contexts, in order to give the audience and the performer additional visual cues, we installed two large LEDs, one on each side of the box device. With this little theatrical trick, we give extra information about what is being sensed, making it easier to relate the performer’s gestures to what is being heard. Thus, the system helps the performer communicate with the audience.

Sound Processing Engine

The sound processing engine for SoundCatcher is built in the Max/MSP environment and uses two concurrent approaches to provide a richer experience for the performer. On the one hand, through a time-domain approach, a buffer is recorded and its playback start and end points are controlled by the ultrasonic sensors. The buffer is always playing, so if the performer wants to silence it, she must fill it with silence. On the other hand, a frequency-domain approach is used to freeze a sound in real-time. This implementation continuously resynthesizes several frames with a stochastic spectral blurring technique, allowing smoother transitions and avoiding frame-effect artifacts. The patch also allows the performer to crossfade between two different portions of audio over a desired time. Using both techniques at once, the musician can compose and perform by looping on a long time scale and by freezing and crossfading audio frames on a short time scale, both in real-time. To make it easier to work with other musicians, sequencers, and digital audio workstations, the buffer loop start and end points can be synchronized to MIDI clock, ensuring a synchronized performance if desired.
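The time-domain control described above can be sketched outside Max/MSP as follows. This is an illustrative assumption, not the actual patch: it maps each hand distance linearly onto the buffer length, treats the smaller position as the loop start, and optionally snaps both points to a grid derived from MIDI clock (24 clock ticks per quarter note). All function names and the sixteenth-note default grid are hypothetical.

```python
MAX_RANGE_CM = 60.0           # sensed space per hand stated in the text
MIDI_CLOCKS_PER_QUARTER = 24  # MIDI clock resolution


def distance_to_position_ms(distance_cm, buffer_ms):
    """Map a hand distance linearly onto a position inside the buffer."""
    fraction = min(max(distance_cm / MAX_RANGE_CM, 0.0), 1.0)
    return fraction * buffer_ms


def quantize_to_clock(position_ms, bpm, clocks_per_step=6):
    """Snap a position to the nearest grid step (6 clock ticks = one sixteenth note)."""
    tick_ms = 60000.0 / (bpm * MIDI_CLOCKS_PER_QUARTER)
    step_ms = tick_ms * clocks_per_step
    return round(position_ms / step_ms) * step_ms


def loop_points_ms(left_cm, right_cm, buffer_ms, bpm=None):
    """Return (start, end) loop points; quantized to the clock grid when bpm is given."""
    a = distance_to_position_ms(left_cm, buffer_ms)
    b = distance_to_position_ms(right_cm, buffer_ms)
    if bpm is not None:
        a = quantize_to_clock(a, bpm)
        b = quantize_to_clock(b, bpm)
    return (min(a, b), max(a, b))
```

For example, with a 4-second buffer, hands at 15 cm and 45 cm select the loop from 1000 ms to 3000 ms; at 120 BPM the sixteenth-note grid is 125 ms wide, so nearby hand positions snap to the same loop points.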

Mapping

Although SoundCatcher was designed to give the performer straightforward control of both loop points, making its use apparently simple, we decided to map the same variables to additional parameters in order to offer more expressive possibilities, such as the frame blurring level described above and the dry/wet signal ratio for the time-domain looper section. In addition, skilled use of the footswitch allows the performer to create complex rhythmic and melodic structures. Thus, the performer can use the controller in both analytic and holistic ways: she can stay conscious of the control parameters when needed, or work in a more explorative and integral way when she wants to try more unexpected sounds. It is also important to consider that the sound output depends on the audio signal fed to the system, so performing with it assumes that the singer explores her vocal expressive possibilities as well as the gestural controller to obtain more artistic results.
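A one-to-many mapping of this kind can be sketched as below. The assignment of hands to parameters is purely hypothetical (the text does not say which hand drives the blur level or the dry/wet ratio), and all outputs are normalized to the range 0 to 1.

```python
def normalize(distance_cm, max_cm=60.0):
    """Clamp a hand distance to the sensed space and scale it to 0..1."""
    return min(max(distance_cm / max_cm, 0.0), 1.0)


def map_hands(left_cm, right_cm):
    """Derive several normalized engine parameters from the same two distances.

    Hypothetical assignment: both hands set the loop points, while the left
    hand also drives the dry/wet ratio and the right hand the blur level.
    """
    left = normalize(left_cm)
    right = normalize(right_cm)
    return {
        "loop_start": min(left, right),
        "loop_end": max(left, right),
        "dry_wet": left,
        "blur": right,
    }
```

Because every parameter is derived from the same two distances, a single gesture changes several aspects of the sound at once, which is what allows the more holistic playing style described above.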

Setup

The device is mounted on the microphone stand, and its height can be adjusted to suit the singer’s expressive needs and performance convenience. The vibrating motors and footswitch are connected directly to the device, so the entire controller can be considered a single out-of-the-box product. The computer running the software side of SoundCatcher does not need to be on stage. Both LEDs can be adjusted to light up the performer’s hands or her face, achieving different expressive and theatrical effects.

Musical Applications

With the current parameter mapping, SoundCatcher can be used to augment vocal performances in several ways, such as creating new melodic and rhythmic structures, repeating desired audio portions with or without synchronization, freezing and crossfading different audio frames, and creating delay lines. It can be used for composition, performance, or both. The device’s visual feedback, provided by very bright LEDs, can be used in live contexts to enhance the audience’s understanding of the gestural controller and the sound engine’s behavior. Although the setup was designed for a vocalist, it can be used by other musicians. A first approach to the use of SoundCatcher in a musical performance context can be seen in the following video.

Video:

Funding:

- NSERC

Press:

Video demo – https://vimeo.com/8112647