Description:

How much information is there in body gesture during musical performance? Current and previous work at the IDMIL includes the creation of databases of motion capture (MoCap) data from performances on various instruments (violin, viola, cello, clarinet, drums) by student and professional musicians. Motion capture is well suited to movement analysis because it provides highly accurate information about movement and allows movement to be isolated from other details in a scene.

There are many approaches to examining motion capture data. Examples include functional data analysis (Vines et al., 2006) and sonification of movement data (Verfaille et al., 2006). This project uses machine learning. In particular, it explores the use of different artificial neural network architectures in the analysis of motion capture data, with the aim of understanding performer gesture.

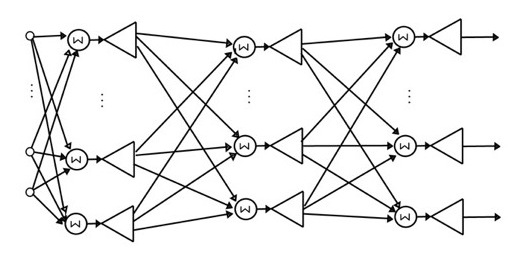

Artificial neural networks are a family of algorithms that learn by iteratively making small adjustments to weighted connections between simple processing units. Currently, this project uses feedforward networks and recurrent networks. The two are very similar, but the recurrent versions allow for feedback, so that there is an effect of sequence. That is, the order of the input can affect the output in the recurrent network but not in the feedforward network.
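The difference in sensitivity to order can be illustrated with a minimal NumPy sketch. It is not the project's implementation: the layer sizes, the random weights, and the random "frames" are all hypothetical stand-ins, and the feedforward network is assumed to process each frame independently, with the results averaged.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical sizes: 6 input features per frame, 4 hidden units.
n_features, n_hidden = 6, 4
W_in = rng.normal(size=(n_hidden, n_features))   # input-to-hidden weights
W_rec = rng.normal(size=(n_hidden, n_hidden))    # feedback weights (recurrent only)

def feedforward_summary(frames):
    """Process every frame independently, then average the hidden activations."""
    return np.tanh(frames @ W_in.T).mean(axis=0)

def recurrent_summary(frames):
    """Elman-style recurrence: each hidden state depends on the previous one."""
    h = np.zeros(n_hidden)
    for x in frames:
        h = np.tanh(W_in @ x + W_rec @ h)
    return h

# Random stand-in for a short sequence of motion capture frames.
frames = rng.normal(size=(10, n_features))
reversed_frames = frames[::-1]

ff_same = np.allclose(feedforward_summary(frames),
                      feedforward_summary(reversed_frames))
rnn_same = np.allclose(recurrent_summary(frames),
                       recurrent_summary(reversed_frames))
print("feedforward output unchanged by reordering:", ff_same)
print("recurrent output unchanged by reordering:", rnn_same)
```

Reversing the frames leaves the averaged feedforward output unchanged, whereas the final recurrent state differs because each step feeds back into the next.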

Networks are trained to identify instruments based on motion capture data from musicians. Some networks are only trained on subsets of the body data (e.g. only the angles of the hips, knees, and ankles, or only the centre of mass), and still perform well at classifying the instrument when compared with human performance on a similar task.
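As a rough illustration of training on a subset of the body data, the sketch below fits a small feedforward network by backpropagation using only a few feature columns. Everything in it is an assumption made for illustration: the data are synthetic rather than motion capture recordings, the `lower_body` column indices are hypothetical, and the network size and learning rate are arbitrary rather than those used in the project.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic stand-in for MoCap features: 3 "instruments", 12 features per sample.
n_classes, n_per_class, n_features = 3, 100, 12
means = np.array([[2.0 * ((j + c) % 3 - 1) for j in range(n_features)]
                  for c in range(n_classes)])
X = np.vstack([m + rng.normal(size=(n_per_class, n_features)) for m in means])
y = np.repeat(np.arange(n_classes), n_per_class)

# Hypothetical indices standing in for, e.g., hip, knee, and ankle angles.
lower_body = [0, 1, 2, 3]

# Shuffle and split into training and held-out sets.
order = rng.permutation(len(X))
train, test = order[:240], order[240:]

def train_mlp(X, y, n_hidden=8, lr=0.1, epochs=1000):
    """One-hidden-layer network trained with full-batch backpropagation."""
    W1 = rng.normal(scale=0.3, size=(X.shape[1], n_hidden))
    b1 = np.zeros(n_hidden)
    W2 = rng.normal(scale=0.3, size=(n_hidden, n_classes))
    b2 = np.zeros(n_classes)
    Y = np.eye(n_classes)[y]                      # one-hot targets
    for _ in range(epochs):
        H = np.tanh(X @ W1 + b1)                  # forward pass
        logits = H @ W2 + b2
        logits -= logits.max(axis=1, keepdims=True)
        P = np.exp(logits)
        P /= P.sum(axis=1, keepdims=True)         # softmax probabilities
        d_logits = (P - Y) / len(X)               # cross-entropy gradient
        dH = (d_logits @ W2.T) * (1.0 - H ** 2)   # backpropagate through tanh
        W2 -= lr * (H.T @ d_logits)
        b2 -= lr * d_logits.sum(axis=0)
        W1 -= lr * (X.T @ dH)
        b1 -= lr * dH.sum(axis=0)
    return W1, b1, W2, b2

def predict(params, X):
    W1, b1, W2, b2 = params
    return np.argmax(np.tanh(X @ W1 + b1) @ W2 + b2, axis=1)

# Train and evaluate using only the lower-body columns.
params = train_mlp(X[train][:, lower_body], y[train])
accuracy_subset = np.mean(predict(params, X[test][:, lower_body]) == y[test])
print("held-out accuracy on feature subset:", accuracy_subset)
```

Because the synthetic classes are well separated by construction, the accuracy printed here says nothing about real performance data. The sketch only shows how a feature subset can be selected before training.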

IDMIL Participants:

Research Areas:

Funding:

- NSERC

Publications:

- Yaremchuk, V., Wanderley, M. M. (2014). Brahms, Bodies and Backpropagation: Artificial Neural Networks for Movement Classification in Musical Performance. In Proceedings of 1st International Conference on Movement Computing (MOCO'14) (pp. 88-93). Paris, France.